Studio: NO GHOST

Client: Digital Catapult / Niantic

Main Collaborator: Studio Wayne McGregor

Role: Creative Technologist / Unity Developer

Year: 2023

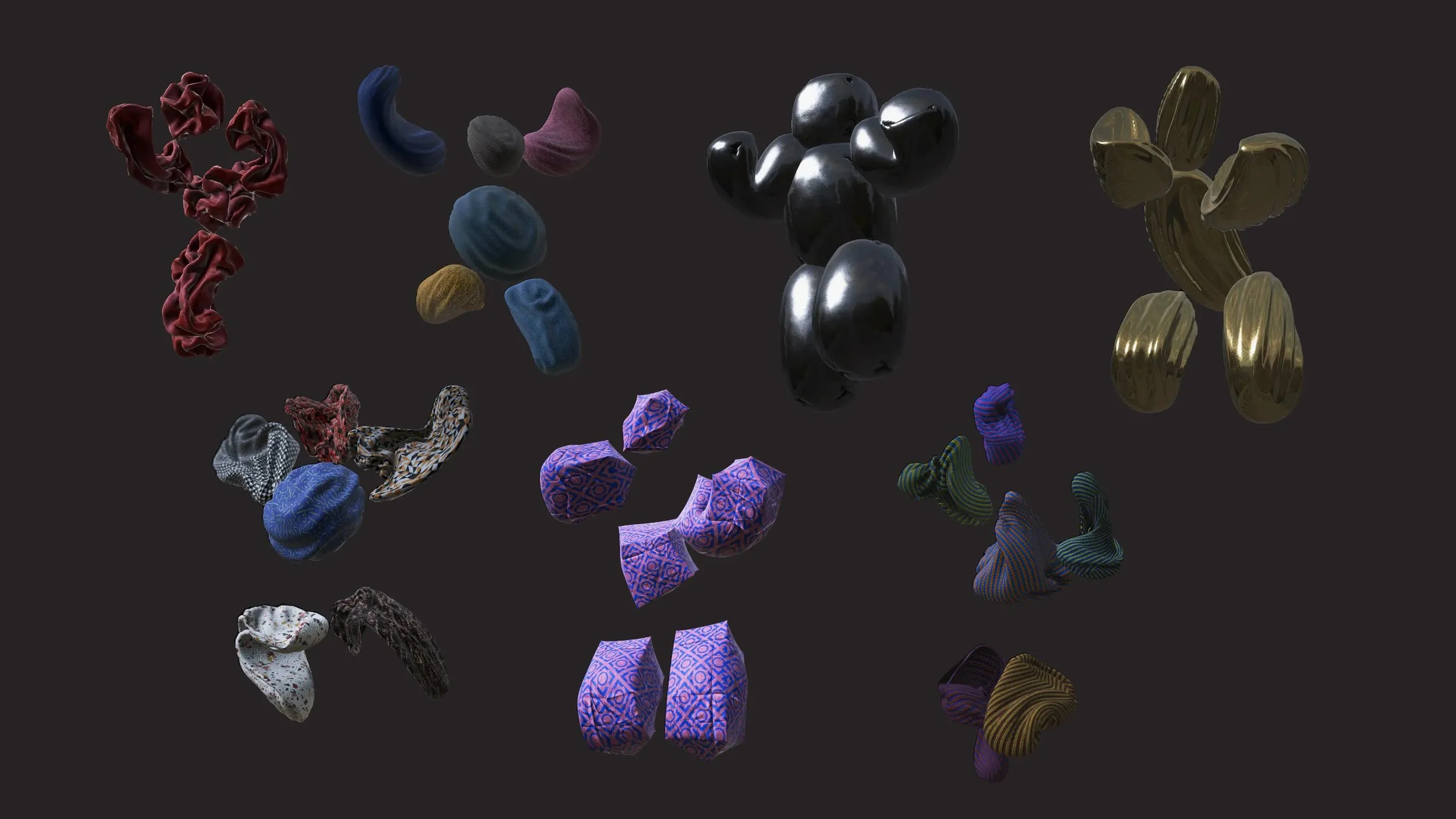

Ekhos is a location-based augmented reality (AR) app that transforms the expressive movements of city dwellers into dynamic digital sculptures called “Ekhos”, bringing urban spaces to life.

Each ‘Ekho’ is a unique creation, reflecting the individuality of its contributor. By weaving personal motion into shared digital art, the app encourages deeper connections to our environment, inspires physical self-expression, and strengthens community bonds.

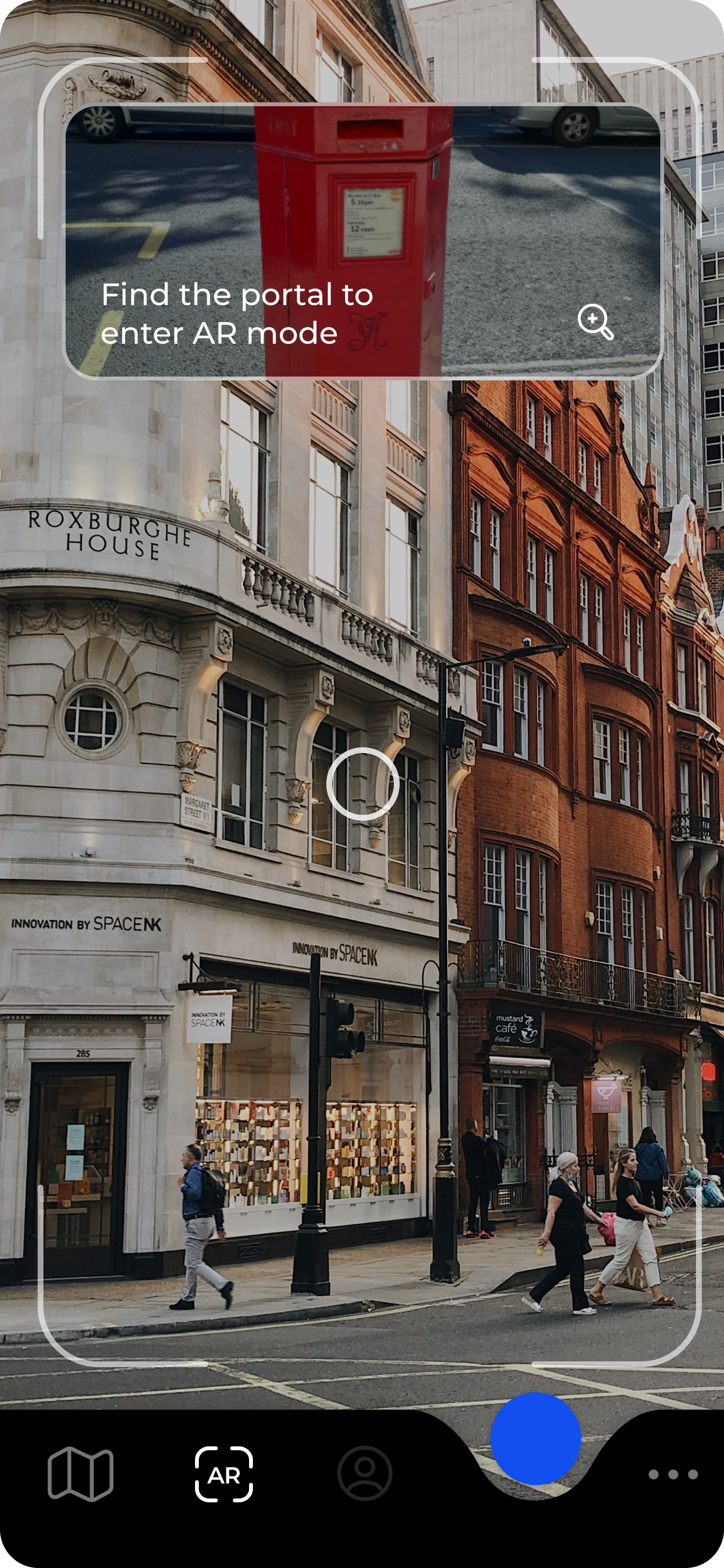

Community-Based AR Game

Players navigate the city using a map to discover Ekhos, location-based AR beings anchored to real-world landmarks through the Niantic Lightship ARDK’s Visual Positioning System.

Upon reaching an Ekho’s location—whether near the London Eye or another urban site—players can scan the area to reveal the living digital sculpture. Using their phone, they contribute to the Ekho by tracing a movement in space, shaping its form, motion, and soundscape. Each interaction adds a unique layer to the Ekho’s evolving presence.

A universe born from the movement of the visitors

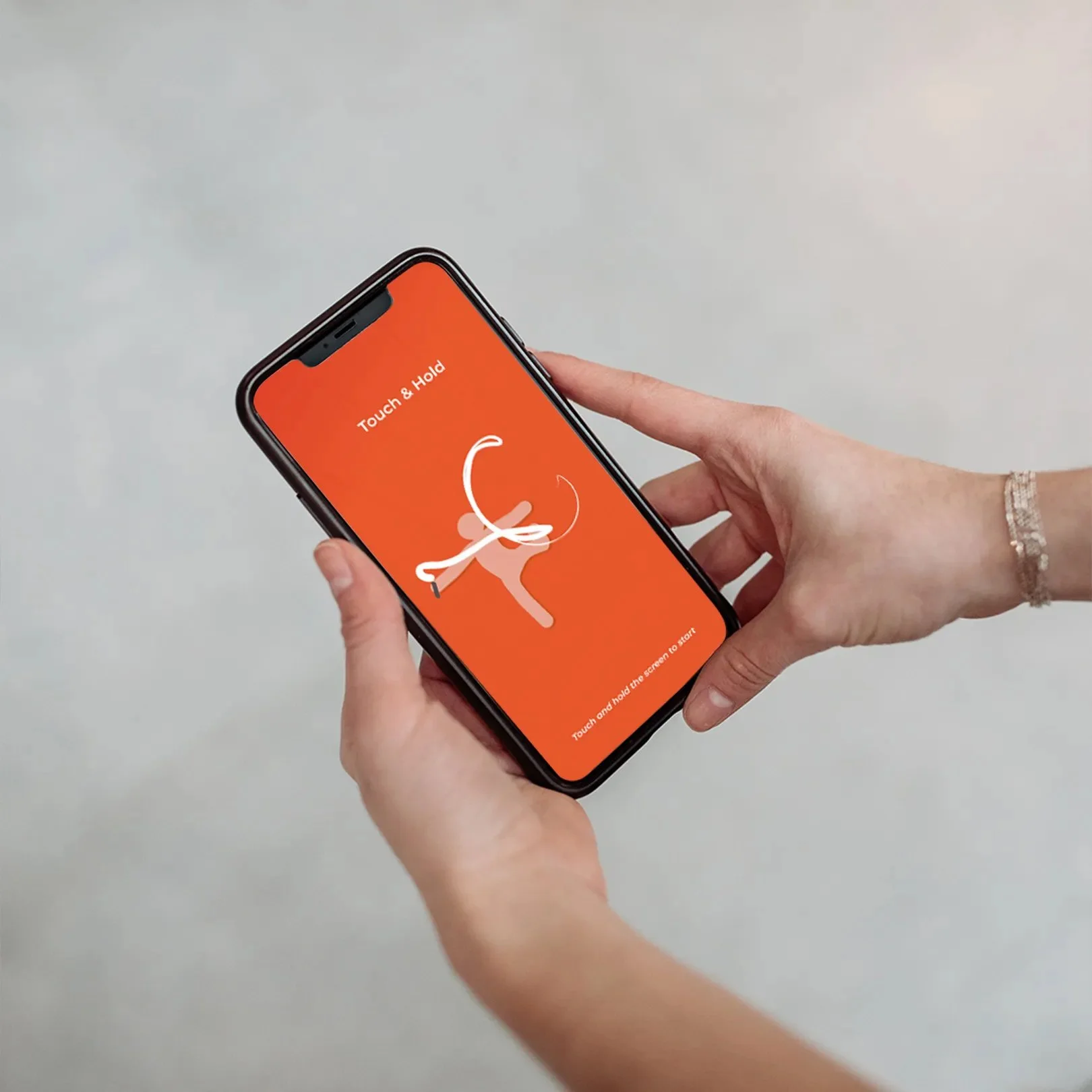

Leave a Trace in the City

To contribute, players carve a line through physical space by moving their phone through the air. This gesture becomes a unique movement signature.

A Unique Performance

Each contribution shapes the Ekho’s evolving form, influencing its appearance, motion, and sound. The Ekho responds in real time, performing your movement as part of its living choreography. No two traces are the same: every gesture becomes part of a collective, ever-changing performance within the city.

The Major Challenge: How can a line carved in space by the player — a simple spatial gesture — become a fully rigged digital performance?

From Gesture to Choreography

1- Mathematical Analysis

To translate simple user gestures into expressive movement, I proposed and organised a motion capture session with dancers from Studio Wayne McGregor, partnering with Move.AI to capture full-body choreography without markers. To prepare for it, I analysed the spatial lines collected from the app, looking at rhythm, frequency, curvature, and directionality. These features became the keywords we used to brief the dancers.
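
As an illustration of this kind of analysis, here is a minimal Python sketch that reduces a traced line to a few scalar descriptors. It is not the code used in the app: the function name `gesture_features`, the input format (3D points with timestamps), and the specific measures are assumptions chosen for clarity. Turning angle stands in for curvature, speed variation for rhythm, and straightness for directionality; frequency is not covered.

```python
import math

def gesture_features(points, timestamps):
    """Summarise a traced 3D line as a few scalar descriptors (hypothetical helper).

    points: list of (x, y, z) positions, e.g. in metres.
    timestamps: list of seconds, same length, strictly increasing.
    """
    if len(points) < 3 or len(points) != len(timestamps):
        raise ValueError("need at least 3 points, each with a timestamp")

    # Vectors, lengths and speeds between consecutive samples.
    segments = [tuple(b[i] - a[i] for i in range(3))
                for a, b in zip(points, points[1:])]
    lengths = [math.sqrt(sum(c * c for c in s)) for s in segments]
    durations = [t1 - t0 for t0, t1 in zip(timestamps, timestamps[1:])]
    speeds = [length / dt for length, dt in zip(lengths, durations)]

    # Turning angle between successive segments: a discrete proxy for curvature.
    angles = []
    for i in range(len(segments) - 1):
        l0, l1 = lengths[i], lengths[i + 1]
        if l0 == 0 or l1 == 0:
            continue  # skip stationary samples
        dot = sum(a * b for a, b in zip(segments[i], segments[i + 1]))
        angles.append(math.acos(max(-1.0, min(1.0, dot / (l0 * l1)))))

    path_length = sum(lengths)
    net = math.sqrt(sum((points[-1][i] - points[0][i]) ** 2 for i in range(3)))
    mean_speed = sum(speeds) / len(speeds)
    speed_std = math.sqrt(sum((s - mean_speed) ** 2 for s in speeds) / len(speeds))

    return {
        "path_length": path_length,
        "mean_speed": mean_speed,
        # Rhythm proxy: how uneven the speed is along the line.
        "speed_variation": speed_std / mean_speed if mean_speed > 0 else 0.0,
        # Curvature proxy: average turning angle in radians.
        "mean_turning_angle": sum(angles) / len(angles) if angles else 0.0,
        # Directionality proxy: 1.0 for a straight line, near 0 for a closed loop.
        "straightness": net / path_length if path_length > 0 else 0.0,
    }
```

Under these assumptions, a sharp zigzag scores a high mean turning angle and low straightness, while a long sweeping arc scores low on the first and higher on the second, which is the kind of contrast that cue words such as “edgy” and “flowy” describe.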

2- Custom Choreography Motion Capture Library

Together with Wayne, we explored how those abstract qualities could be embodied physically. What does “edgy” look like in motion? How do you dance “flowy”? The dancers created short choreographies based on those cues, which together formed a library of motion sequences.

3- Procedural Animation using Motion Matching

After interpreting the user’s motion input, I experimented with motion matching, a data-driven animation technique typically used in games to select and blend character animations such as walking and idling. I adapted this method to work with our choreography motion capture data, aiming to procedurally generate a unique full-body performance for each player input. The goal was for every gesture to trigger a responsive, expressive dance.
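
The core of motion matching is a nearest-neighbour search: describe the query and every candidate motion with a feature vector, then pick the candidate with the lowest cost. The Python sketch below shows that idea in a much simplified form, matching a gesture against whole clips rather than against individual animation frames as a full motion matching system would. The function names, the two-value feature vectors, and the clip values are hypothetical; only the cue words “flowy” and “edgy” come from the project.

```python
import math

def feature_scales(vectors):
    """Per-dimension standard deviation, so no single feature dominates the cost."""
    dims = len(vectors[0])
    scales = []
    for d in range(dims):
        mean = sum(v[d] for v in vectors) / len(vectors)
        var = sum((v[d] - mean) ** 2 for v in vectors) / len(vectors)
        scales.append(math.sqrt(var) or 1.0)  # avoid dividing by zero
    return scales

def best_match(query, library, weights=None):
    """Return the name of the clip whose features are closest to the query.

    query: feature vector for the player's gesture.
    library: dict mapping clip name -> feature vector of the same length.
    weights: optional per-feature importance (defaults to equal weights).
    """
    names = list(library)
    scales = feature_scales([library[n] for n in names])
    weights = weights or [1.0] * len(query)

    def cost(name):
        # Weighted squared distance in normalised feature space.
        return sum(w * ((q - x) / s) ** 2
                   for w, q, x, s in zip(weights, query, library[name], scales))

    return min(names, key=cost)

# Hypothetical library: [mean turning angle, speed variation] per clip.
clips = {"flowy": [0.4, 0.1], "edgy": [1.5, 1.2]}
print(best_match([1.4, 1.0], clips))  # prints "edgy"
```

A frame-level version would run the same search repeatedly during playback and blend between the selected poses, which is what lets the result respond continuously rather than simply play back one clip.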